Peer Learning is...

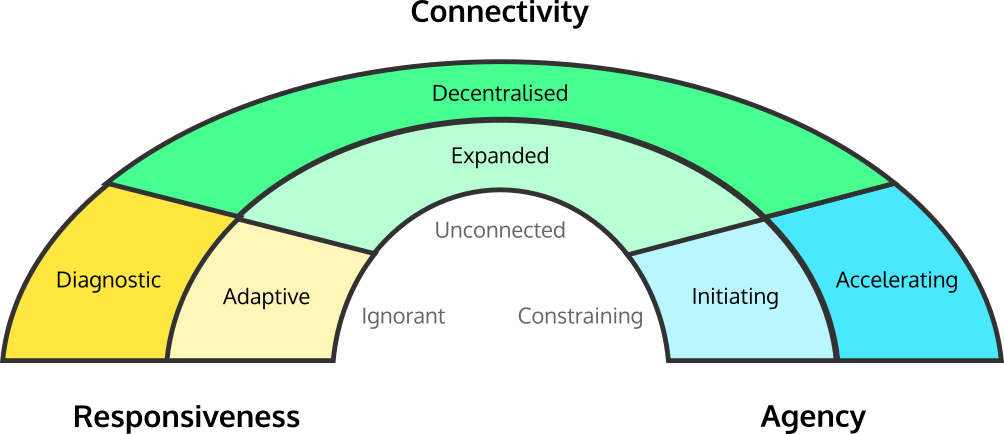

The ARC Model - evaluating peer learning environments

Whether you're looking to change formal educational settings, work meetings, workshops at startup accelerators, or groups sharing their craft, there is always the temptation to "just make things more interactive". But this is a patch that masks underlying problems. It doesn't fix the program. The intended learning still doesn't happen.

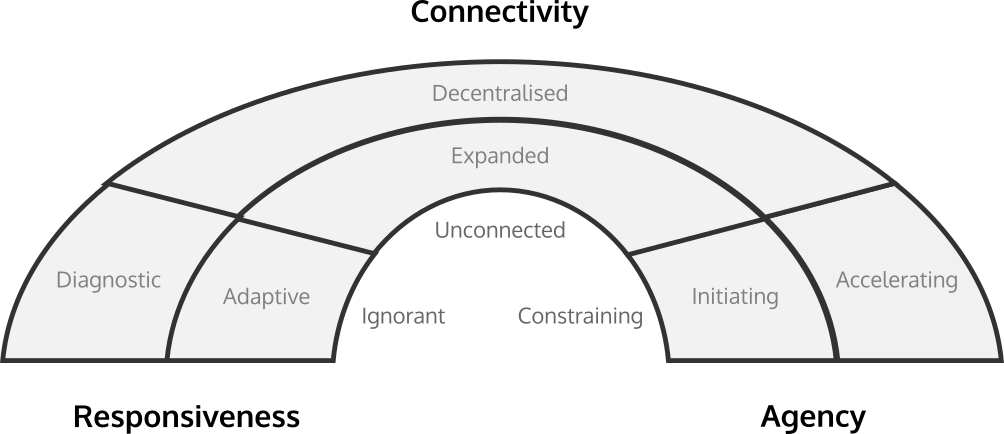

To help educators dive in and see where to start working on a program, we've created a tool for evaluating the effectiveness of a learning environment. The tool helps you make well-founded choices about what to do next.

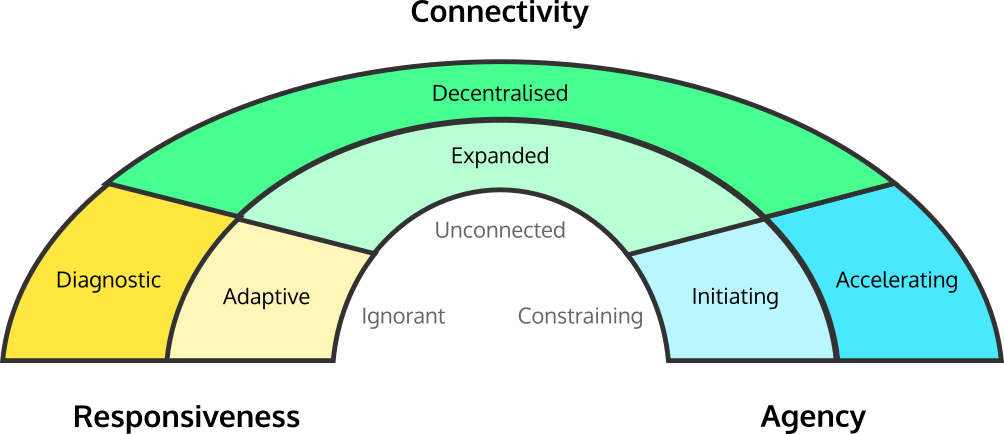

The ARC is based on the 3 characteristics of Peer Learning Programs: Agency, Responsiveness and Connectivity. Here's a quick recap:

Agency — Invocation of self-direction in learners.

Agency starts with a push by the educator to shape the learner’s mindset, initiating them. The educator then shifts into a supporting role, accelerating learners in the directions they choose.

Responsiveness — A learning environment's ability to systematically assess learners' needs and to provide relevant knowledge on demand.

Programs start becoming more responsive when the educational content adapts to learners’ needs. Ultimately, responsiveness takes the form of diagnosis through mentoring conversations with the learner.

Connectivity - Access provided to specific knowledge sources, especially those beyond the learner’s network.

This starts with brokering of connections on the learner’s behalf to expand their reach, and evolves into creating decentralised environments where they can make those connections for themselves.

These are interrelated

Systematically connecting learners to others on their path only makes sense when they have a path. That means they have Agency within their learning environment, but also that the program itself is designed to listen and respond. So Connectivity depends on a foundation of Agency and Responsiveness.

Assessing an education program on each of these characteristics helps us see if we’re going through the motions without effect.

Responsiveness is always a good place to start an evaluation. It is a telling part of the assessment, showing how a program tailors itself to the learner and responds as they progress. Going through the other characteristics from there will make the assessment fall into place. As a whole, the evaluation helps to determine if relevant learning actually takes place and, if not, how and where to fix it.

To demonstrate how the ARC helps us spot these situations, let’s use it to evaluate a few different educational formats:

Lecture programs

Lectures and lecture series are like riding a train. Sit back, get comfy, and cast your gaze out the window. The journey is set; let's hope it's interesting.

Lecture-based programs excel at topics where the learner needs little input into the direction of their learning journey. Lectures typically don’t sense learners’ needs or adapt to them, don’t connect learners to experts other than the lecturer, and give the learner little or no agency over the topics.

For topics and disciplines that don’t change much, the repetitiveness of this form lets lecturers improve their delivery through practice, and polish their content over time.

People tune out when lectures don't feel relevant. This is the cause behind lecturers' concerns about creating engagement in their classes.

When classroom approaches include interactivity, experiential learning and discussion, they help stimulate the learner and make the learning experience more effective, but everyone is still on the same train on the same track. The curriculum is set.

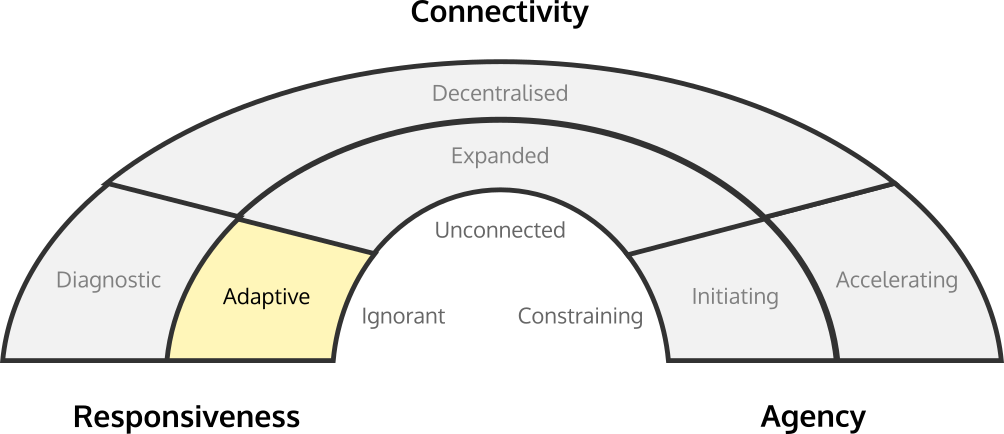

When lecturers start by asking the students questions - either about what they want to learn today, or assessing them with a show of hands - the educator is reforming the plan according to learner needs. This is a form of adaptiveness, so on the ARC, we can rate Responsiveness at Level 1 (Adaptive) in these cases.

However, lectures don’t enable learners to decide how they would like to achieve their learning goals, so we rate them at Level 0 (Constraining) for Agency. They also don’t involve other experts to address these needs, so we evaluate them as Unconnected — Level 0 on the Connectivity scale.

We can see why even great lectures leave something to be desired from the learner's perspective. We can also see how to improve them. Quick wins lie in becoming more responsive. What about starting with a session where learners share their biggest questions about the upcoming topic? Those reveal struggles and issues up front, so lecturers know where to detour or slow down in advance. Or, if the class is too big for that, a quick online survey sent to participants before the lecture can give similar insight. If a few students have relevant projects, why not bring them to the podium and work with them or coach them, for all to benefit?

All these types of actions will help the lecturer to collect an impression of what’s on the mind of learners as a form of preparation, and put emphasis on those particular topics.

Panel discussions

The idea behind the panel discussion is to get a good conversation going between experts, to flush out the most relevant topics of the day. The reality is that panels tend towards being a set of small talks, with each panellist pushing a pre-determined agenda. A lot depends on the preparation of the moderator, but their research tends to be on the panellists and not on the audience, so their interjections risk feeling irrelevant to the crowd. For all of their potential, and all the hard work and good intentions that go into them, panel discussions at conferences tend to get the most scorching commentary on social media and other backchannels. Let's look at why.

Many panel moderators don’t like to involve the audience, and they have good reason. Audience questions are often irrelevant, or audience members “like to state a point related to the topic, rather than asking a question”. Audience involvement is seen mostly as a distraction, and because of this, panel discussions restrict learner participation.

Let’s look at panels from the learner's perspective. Panel discussions do tend to promise interesting topics from experts worth learning from. Learners can choose which panels to attend. So there's an attractive potential of relevance and agency to live up to.

But the panel format leaves learners powerless to express themselves, or direct the discussion to something meaningful to them.

This is frustrating for learners. So when it’s question time, the frustration and the built-up pressure to learn something relevant turn Q&As into a different phenomenon: a spouting pressure release for unanswered questions and self-expression. The problem isn’t the audience. It’s that they haven’t been given a voice except for a short question right at the end, and by then the panel topics have diverged far from what's relevant to the audience.

It's frustrating for panellists too. They’ve been put in a format that appears to be conversational, but is actually quite slow and constraining. When panels get boring, a typical reaction is to turn them into a debate to create some dynamism and energy. But that can lead to a battle of generalisations, where panellists assume different contexts or get forced to defend straw-man arguments.

How do panels score on the ARC? Well, they fail to make a mark on Connectivity, because they don’t facilitate the creation of relationships with the learners (Level 0, sorry).

Do the learners have the opportunity to specify the topics of discussion, other than asking a question? Does the learner have any say in how to interact with the expert? No to both, hence zeros all round for Responsiveness (Ignorant) and Agency (Constraining).

Targeted calibration on the questions that learners have creates more engagement. Could you find specific challenges amongst them to be discussed by the panel?

A few conferences flip the panel discussion into a "Positive Shark Tank" where a few interesting learners' challenges are presented to the panel, and panellists then compete with or build on each other's advice.

Others take questions early, and a moderator picks themes from them to direct the discussion.

Local people in the audience understand local needs. Novices in the audience understand novice needs. Putting them in the mix creates unity amongst the interests of all participants, and provides a more constructive learning context overall.

Panels benefit a lot from improving Responsiveness: identifying and including representatives of groups in the audience, starting by calibrating with the audience, or using the panel as an opportunity to diagnose peer learners. These steps make them far more relevant, rewarding and engaging.

Large conferences

If you’ve ever had the opportunity to experience a conference of thousands or tens of thousands of people, you’ve probably left with a sense of awe or excitement. Large conferences are a unique type of learning experience because of who you can meet. They attract a broad, diverse group of people with some common interest. The potential is in the people you meet, who you’d probably never discover otherwise.

In order to deliver on the promise of bringing people together, conference organisers need to attract a crowd. The way they draw in the crowds is by booking big-name speakers for the event.

For the organisers, the success of a conference is usually fragile. If the talks are bad, the participant feedback for the conference as a whole will reflect that, so organisers tend to include more talks in the hope that more of them are good. If a lot of people leave without making great connections, the feedback will reflect that too.

Conferences hold a promise of connections, and the more responsive they are, the more consistently they deliver on it. The freedom to shape the agenda is an issue. How can you give participants more control to shape their own program at the conference? The more people can choose the banners they congregate under, the more the environment helps them meet relevant new people. The ARC reveals where this risk to the success of a conference comes from.

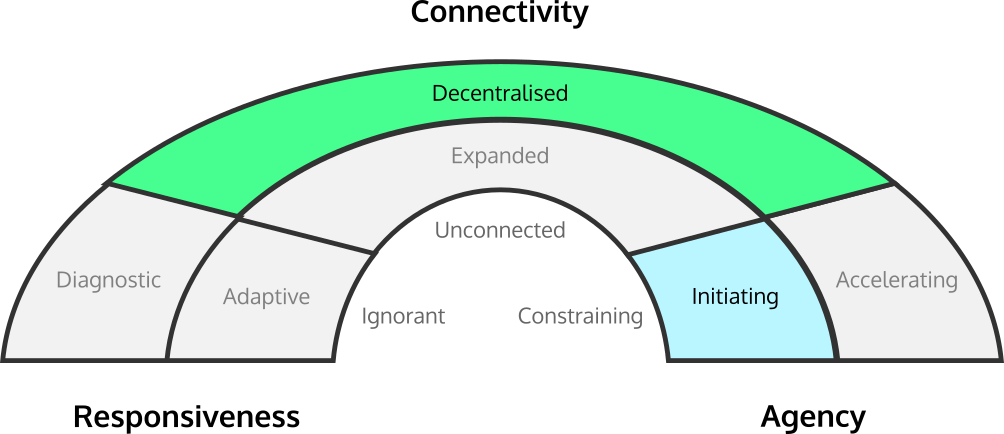

Because so many people with common interests and challenges are drawn together, the Connectivity potential of a large conference is Decentralised, the maximum rating of 2. Learners could find people highly relevant to their specific learning goals with almost no delay, and with no need to wait for an organiser or facilitator to make those connections individually. But because conferences are designed around the speakers, this is where misalignment with the learners’ needs happens.

Conference schedules are usually heavy on talks, and light on social interaction. The physical space also induces people to find a seat in a dark lecture hall and listen to a speaker rather than engage with like-minded people. As a result, people's time allocation for the conference is imposed on them.

The content of the talks themselves is also set by the individual speaker, not the learners. This centralised control over the setup of the program makes Large Conferences ignorant of learners' needs (Responsiveness Level 0).

Large Conferences enable some agency in learners, who can choose which talks to attend (or not). They don’t allow the learner to specify or direct topics, but still push the learner to curate their own learning in some way. (Agency Level 1: Initiating)

So the promise of Connectivity is not supported by a foundation. The paradox of the conference is that all the talks actually prevent people from meeting.

So while conferences tend to inspire and initiate agency, for most, the value of the network around them is lost because the environment isn't designed to enable access to it.

There are conferences that, by design, provide a way for people to share their agendas, those that don't cater to speakers who want to give the same talks everywhere, and those that loosely structure social interactions around common interests. Those conferences provide some form of adaptiveness for the learners, and can expand their network meaningfully. But those are the exception.

A simple, effective starting point is for conferences to encourage shorter talks and make it convenient for speakers to prepare their slides at the last minute. They're usually not being lazy, but using the time to understand who'll be in their audience. Another simple change is to light the audience, encouraging the speaker to interact, or at least respond to facial expressions in the crowd.

Still, these only go so far. Building the full foundation, one that allows instant connectivity for everyone present, requires using the extensive knowledge among the experts to diagnose the needs of others, and giving learners with active agency in their own work control over at least part of the conference agenda.

Rather than fewer, bigger, darker rooms, conferences that shift towards more, smaller, comfortable rooms take big steps towards this. Those types of conferences naturally allow experts to become known and diagnose the needs in the room, and allow peers to find each other.

Retreats

Over the last decade, the conference business in many topics has shifted towards mega-conferences that compete on sheer size, rather than content or quality. A response to that is the emergence of smaller retreats, where a group of fewer than 20 people is chosen to meet in a comfortable, secluded environment for a few days.

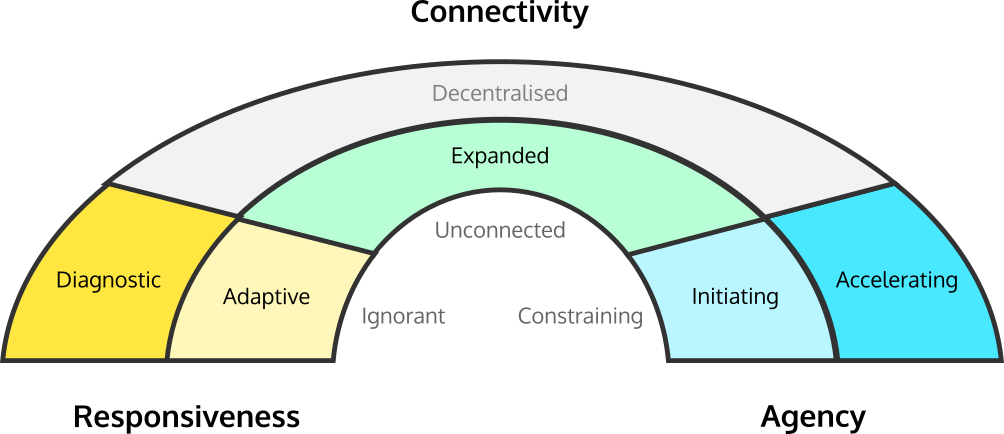

Retreats are usually quite unstructured, allowing the participants to bring their own agendas and start meaningful conversations. They are good examples of creating environments that build on Agency. If you’re invited to a retreat, you’re expected to be self-directing and proactively make use of the opportunity. That’s why they rate a level 2 (Accelerating) on Agency.

Being loose on structure has its benefits, but it also comes with problems. Retreats rarely include systemic opportunities for needs to be expressed. With the most pressing needs unsaid, a lot of time is wasted in awkward social interactions and days of “getting to know you” before the deeper knowledge exchanges take place.

Retreats suffer when little effort is made to ensure that the group itself is selected so that each participant meets the most relevant people possible, as early as possible. Retreats with no built-in way for participants to share their goals rate as Ignorant (Level 0) on the Responsiveness scale.

But if the host takes the time to understand and diagnose each participant beforehand, they’ll be able to start everyone off with curated introductions — that’d rate as Diagnostic Responsiveness, level 2.

What talents do your retreat participants have that are relevant to the rest of the group? How can you use that talent to increase connectivity? It takes only a minimal amount of effort for a retreat organiser to select people who will gain from each other, so retreats rate level 1 (Expanding) for Connectivity.

A first intervention to improve a retreat program is to perform active curation to balance the mix of participants with a diversity of perspectives, and experiences. The mix of people then becomes the ingredients for the learner to successfully achieve her outcomes.

To improve Responsiveness, retreat hosts can help participants open up to each other. This isn’t just about stating clear, logical learning goals. For people to open up and share their dreams and fears, they have to feel safe and welcome among their peers, in an empathic atmosphere and a familiar culture. This way, it will take less time and less social awkwardness to understand how to start meaningful exchanges with another participant.

Lastly, the implicit rule of a “retreat” is often to relax and socialise only. But if a participant has a rare face-to-face with someone who can really help them, then rolling up their sleeves together on “some work” might be what’s badly needed. Creating an environment that enables this to happen, both practically and socially, provides a systematic deep response to those critical learning opportunities.

Workshops

Workshops tend to address a smaller audience than lectures, which allows the instructor to interact more. Well-delivered workshops feel more like getting things done than "just learning". The teacher can ask questions, check in, adapt a bit. The learners' questions improve because they’re prompted by their experience, putting their learning into their context.

Experienced workshop instructors usually start by checking in with their students, so they can adjust the workshop to the right level of advancement.

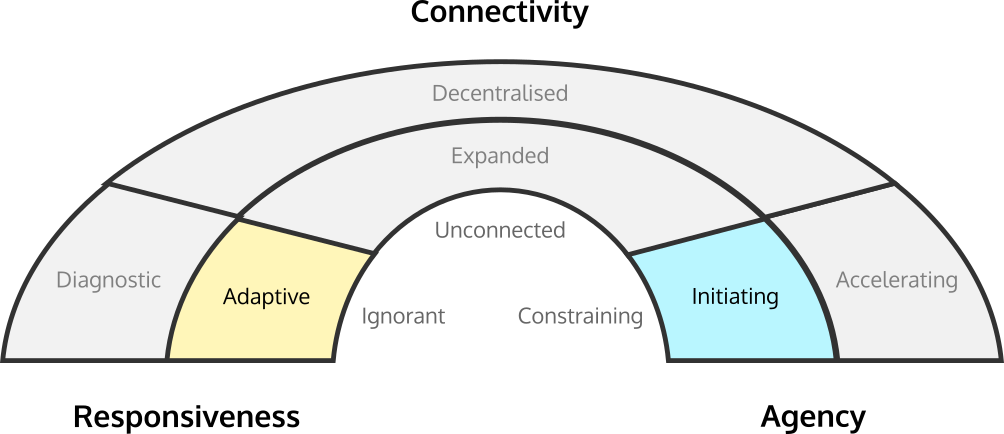

So how do workshops score as a peer learning format? Workshops are a great example of the power of Responsiveness. Sometimes, workshops start with a clear question: “what would you like to learn today?”. Other times, it’s more subtle: as the teacher progresses, they ask questions such as “Have you heard of this? Has anyone done this before?”.

These dynamics make workshops quite responsive. There is time and space to delve into specific questions that a learner might have. Responsiveness of workshops is generally at Level 1 (Adaptive) because the learners can request topics or changes in pace, even if their needs aren’t diagnosed.

What would happen if learners started requesting the specific content that they needed, as they need it? When workshops are planned on a pre-set path, they don't allow for learners with agency. Their questions can create useful detours, but the content still finds its way back to a preset path, which is outside of the control of the learners.

Workshops build confidence in learners and enable them to become self-directed. They enable Agency, so rate 1 (Initiating) there. There's only one source of knowledge, the instructor, so Connectivity is at Level 0: Unconnected.

Common interventions to improve workshops increase Responsiveness by being on the look-out for topics that emerge within a group of learners. Ensuring that the workshops address those topics means that they serve the learners' goals at the right time, when the learners actually need those answers.

Some workshops rely on active agency, recognising the opportunity for an expert practitioner to just work with a learner. “Show & Tells” are an example of this style of workshop, where learners present their project to the class, followed by the workshop expert working with them on it. These expose useful tips, ways of working and attitudes to work in practice — none of which are visible in the artificial exercises that usually get planned. This approach also moves the responsiveness to diagnostic, since the instructor goes deep with learners on their projects.

Startup Accelerators

Startup accelerators typically support a set of startups with similar goals and at a similar stage. They lay out a 3-month program that includes a pre-defined educational path with workshops on general startup topics like raising investment, product design, learning from customers, and marketing.

Accelerators also provide a group of mentors, who support the startups with advice and connections.

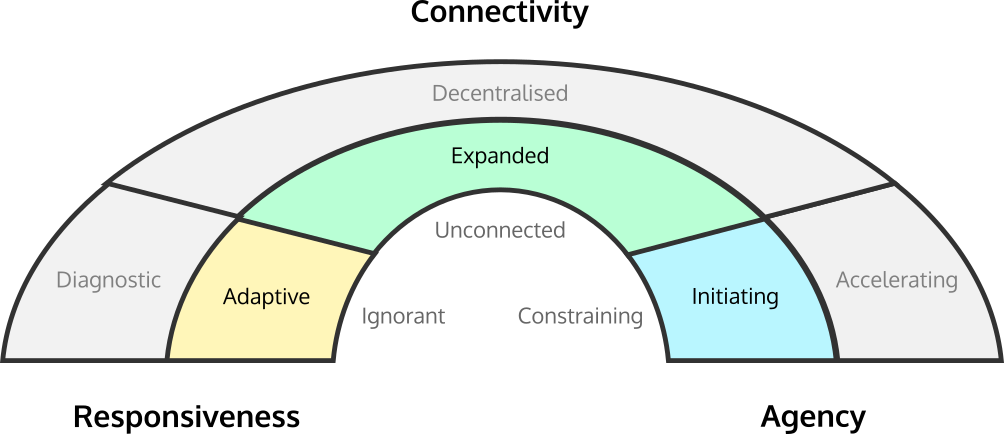

Accelerators tend to score Level 1 (Adaptive) on Responsiveness. They constantly check in with the startup teams to monitor progress, and ask about their big questions. But they tend to be prematurely prescriptive about the solutions to each company's challenges, and if there are individual diagnostics, those are done by individual mentors who have little say in redirecting the program.

Accelerators typically respond by brokering a few select connections and introductions for startup teams via their network. Those select connections take time, so we can rate accelerators level 1 (Expanding) on Connectivity.

If the accelerator is funded by investors, the learners' goals are constrained towards maximizing a return on that investment. If it’s funded by a corporation, their goals are constrained within that corporation’s strategy.

Agency is constrained to the goals of the accelerator program, because it will only respond within the boundaries of the program’s predefined goals. But in most cases, accelerators aren't fully constraining, and the startup founders are encouraged to build their own momentum towards a goal that aligns with the funder, so they are effectively (if partially) Initiating Agency (Level 1).

Some accelerators, particularly those run by novices, treat their programs as compulsory schooling exercises, which limits the agency of the founders they support. When capable founders start to take charge, control their time and run their business, they’re treated as delinquent students and berated for truancy when they don't participate in the heavy training program.

Others recognise that it doesn’t make sense to plan content for the duration of the program, since the immediate needs of each startup will be different.

An approach that can cope with the diversity of stages and the emergent nature of startups' development paths helps here.

An easy fix is to put placeholder dates in the program for workshops, dinner talks, and other events. Scheduling repeating placeholders makes logistics easier, since there’s no need to constantly schedule events and promote them to the startup teams.

Scheduling interactive events, like AMAs (ask me anything), fireside chats and dinner talks, allows the founders to choose topics. It also allows the accelerator to invite non-educators with more relevant experience to drop in easily. Clarity on what these events should cover is created through calibration: gauging progress with startups, sharing notes on them in a peer review setting, and helping mentors share their observations on progress as an outside opinion. These are all small acts of responsiveness that allow for more tailoring to what the teams actually need.

Barcamps

Barcamps are open conferences, usually hosting several hundred people, that allow the participants to define the schedule of topics in every room. They follow a format called Open Space, which starts with everyone meeting in front of an empty schedule on a board. Everyone has the opportunity to present an idea for a conference session in front of everyone else, and add it to the schedule. Barcamps have gone through a global popularity wave, having been run in over 350 cities, with the largest attended by 6,400 people.

Barcamp sessions tend to be more conversational than typical presentations. The Barcamp culture emphasises including people and de-emphasises social status, and it also favours distributed responsibility, so participants take charge and make improvements or fixes as they feel necessary. Compared to a typical conference, Barcamps clearly lack centralised control, and usually feel more "community" than "polished." This takes some adjustment for first-timers, who are usually spotted by someone more experienced and guided along. Experienced barcampers know how to make the most of it, and newcomers quickly figure it out, so Barcamps get full marks for Agency (Accelerating).

To help participants define the topics they bring forward, Barcamps usually support the learning community with tools for preparing before the event. This tooling consists of wikis, online forums, and email lists, where people can discuss their ideas for sessions. These all help participants take control of their agenda at the Barcamp event. This makes the program highly flexible and Responsive (Level 2: Diagnostic).

Although most Barcamp events are modest in size, they achieve similar results to much larger conferences. For one, they tend to attract socially-minded technologists, who have a culture of helping others and “making intros” without asking for anything in return. The resulting experience for learners is fully decentralised connectivity.

At first, Barcamps are overwhelming, because there are so many parallel sessions running, and walking into any of them usually means finding a room of people in mid-conversation. As each new session begins, a new group forms around similar goals but with different approaches, and meaningful relationships form from them. It all makes for a smoothly flowing swarm of people, and many leave the conference with relationships that they know will last a lifetime (Connectivity Level 2: Decentralised).

Try it

With this understanding, the ARC should give you a way to evaluate your own program, spot weaknesses and disconnects, and reveal what to do about them.
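If you keep your evaluations in a script or notebook, the sketch below shows one way an ARC rating could be recorded. The level names are the ones used in this article. The `ArcScore` class, its method names, and the `connectivity_outruns_foundation` check are illustrative assumptions rather than part of the ARC itself; the check is only a rough reading of the idea that Connectivity depends on a foundation of Agency and Responsiveness.

```python
from dataclasses import dataclass

# Level names as used in the article, indexed by level (0, 1, 2).
LEVELS = {
    "agency": ("Constraining", "Initiating", "Accelerating"),
    "responsiveness": ("Ignorant", "Adaptive", "Diagnostic"),
    "connectivity": ("Unconnected", "Expanding", "Decentralised"),
}


@dataclass(frozen=True)
class ArcScore:
    """Hypothetical container for one program's ARC rating."""

    agency: int
    responsiveness: int
    connectivity: int

    def __post_init__(self):
        # Every characteristic is rated on the same 0-2 scale.
        for name in LEVELS:
            level = getattr(self, name)
            if level not in (0, 1, 2):
                raise ValueError(f"{name} must be 0, 1 or 2, got {level!r}")

    def describe(self) -> str:
        """Return the rating with the article's level names attached."""
        parts = []
        for name, labels in LEVELS.items():
            level = getattr(self, name)
            parts.append(f"{name.capitalize()} {level} ({labels[level]})")
        return ", ".join(parts)

    def connectivity_outruns_foundation(self) -> bool:
        """Assumed heuristic: flag Connectivity rated above its foundation."""
        return self.connectivity > min(self.agency, self.responsiveness)


# The large conference as rated above: strong Connectivity potential,
# but Agency at 1 and Responsiveness at 0.
large_conference = ArcScore(agency=1, responsiveness=0, connectivity=2)
print(large_conference.describe())
print(large_conference.connectivity_outruns_foundation())
```

A rating like the Barcamp one (2 on all three characteristics) would not be flagged by this check, while the large conference would. Treat the flag as a prompt for discussion, not a verdict.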

We’d love to see you try, and talk to you about your evaluation and ideas. Please share yours with us, so we can understand how your program works, and give you some pointers if you’d like.